This tutorial explains how to build a scenario that captures users’ feedback about the quality of the products and services your company provides. It collects an overall satisfaction rating and lets users type a comment in their native language.

It follows the YouTube video “No voice goes unnoticed: Build an AI customer feedback automation in Make”. You can find it here.

You can learn more about using the Make AI Toolkit, the Context Extractor here, or learn about the Make AI Agent here. Both courses are part of our Automation to AI Agents: Foundation learning path.

For this use case, we used Jotform to build the form and collect feedback. It integrates well with Make and can automatically start a scenario when someone submits the form.

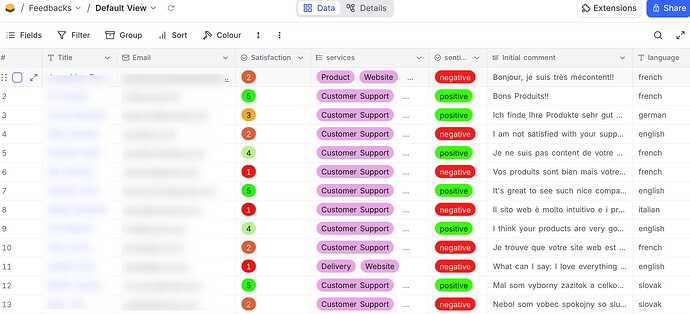

The target system is a database. We used NocoDB and built a table that stores all the information collected from the survey form.

We also used Slack to post messages to the Customer Service team when feedback is negative.

Let’s look at the scenario and the steps you need to perform to build the same one or an equivalent.

Blueprint:

Received Feedback submissions.blueprint.json (44.9 KB)

You can download the scenario blueprint and import it into your Make organization. However, you will need more than the blueprint to make it work properly:

- a Jotform account, and a form

- a Slack account

- a NocoDB account

Because of all these prerequisites, it may be difficult to reproduce the exact configuration. The goal of this article is to give you an idea of what the final use case looks like, so that you can draw inspiration for your own implementation.

Here’s a breakdown of each step:

Jotform / Watch for Submissions

This trigger module is associated with a Make webhook, which is called when a user submits the “Feedback Survey Form”. The webhook is called immediately, with all the data the user entered in the form. The subsequent modules use this data.

Make AI Toolkit / Analyze sentiment

We use this module to determine whether the text the user typed is positive, negative, or neutral. The result is used later in the scenario to decide whether to alert Customer Service (only when the sentiment is negative).

Make AI Toolkit / Identify language

In this use case, users can type text in their native language. The text will be translated to English, and we want to keep track of the original language so that any follow-up message can be translated back into the user’s language.

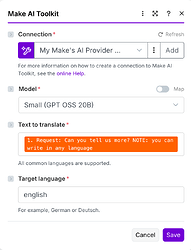

Make AI Toolkit / Translate text

In this module, we translate the user’s text to English. The module doesn’t need the original language as input; it detects it on its own.

Make AI Toolkit / Ask anything

This module and the next one are called only when the user’s text is negative or the overall satisfaction is lower than 3. We use it to ask the AI to summarize the text, analyze the satisfaction rating and service selection, and propose a follow-up for the Customer Service team.
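In Make, this condition is configured as a filter on the route, not written as code. The following Python snippet is only a minimal sketch of the same logic; the `Feedback` class, its field names, and the lowercase sentiment labels are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    # Hypothetical field names; the real ones depend on your form and modules.
    sentiment: str             # assumed labels: "positive", "negative", "neutral"
    overall_satisfaction: int  # assumed to be a numeric rating from the form


def needs_follow_up(feedback: Feedback, threshold: int = 3) -> bool:
    """Return True when the feedback should be routed to Customer Service.

    Mirrors the rule described above: the text is negative OR the overall
    satisfaction is lower than the threshold (3 in this tutorial).
    """
    is_negative = feedback.sentiment.strip().lower() == "negative"
    is_low_rating = feedback.overall_satisfaction < threshold
    return is_negative or is_low_rating
```

Note that the two conditions are combined with OR: a low rating with a neutral comment still triggers the follow-up, and so does a negative comment with a high rating.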

Slack / Create a Message

This module sends a Slack message with all the information about the user, their feedback, and the recommendations generated by the Make AI Toolkit / Ask anything module.
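In the scenario, the message text is assembled by mapping module outputs into the Slack module's text field. As a rough illustration of what such a message could contain, here is a small Python sketch; the function name, parameters, and message layout are all hypothetical and not taken from the blueprint.

```python
def build_slack_text(
    customer_name: str,
    overall_satisfaction: int,
    sentiment: str,
    translated_text: str,
    recommendation: str,
) -> str:
    """Assemble a plain-text alert for the Customer Service channel.

    All parameters are illustrative; map them to whatever fields your
    form and AI modules actually return.
    """
    lines = [
        "New feedback needs attention",
        f"Customer: {customer_name}",
        f"Overall satisfaction: {overall_satisfaction}",
        f"Sentiment: {sentiment}",
        f"Feedback (translated to English): {translated_text}",
        f"Suggested follow-up: {recommendation}",
    ]
    return "\n".join(lines)
```

Keeping one piece of information per line makes the alert easy to scan in a busy channel.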

NocoDB / Create a Record

In this module, we store all information about the user’s feedback, including the following:

- Initial language.

- Translated text.

- Sentiment.
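To make the shape of the stored data more concrete, here is a minimal Python sketch of the record, assuming the form fields arrive as a dictionary. The column names (`InitialLanguage`, `TranslatedText`, `Sentiment`) and the helper function are hypothetical; use the column names of your own NocoDB table.

```python
def build_record(
    submission: dict,
    initial_language: str,
    translated_text: str,
    sentiment: str,
) -> dict:
    """Merge the raw form fields with the values produced by the AI modules.

    The input dictionary is copied, so the original submission is not modified.
    """
    record = dict(submission)
    record.update(
        {
            "InitialLanguage": initial_language,  # hypothetical column name
            "TranslatedText": translated_text,    # hypothetical column name
            "Sentiment": sentiment,               # hypothetical column name
        }
    )
    return record
```

Storing the original language next to the translated text is what later allows a follow-up message to be translated back into the user's language.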

As you can see, the scenario is quite straightforward. It shows how powerful, yet easy to use, the Make AI Toolkit modules are. You can try to implement an equivalent use case, and let your imagination flow!

And again, you can learn more about using the Make AI Toolkit, the Context Extractor here, or learn about the Make AI Agent here. Both courses are part of our Automation to AI Agents: Foundation learning path.